Integrate Rails logs with Elasticsearch, Logstash, and Kibana in Docker Compose

In this post I’ll share an example Docker Compose configuration to integrate Rails logs with Elasticsearch, Logstash, and Kibana.

I installed my Docker dependencies via Homebrew on OS X:

```shell
$ brew list --versions | grep -i docker
docker 1.12.5
docker-compose 1.10.0
docker-machine 0.9.0
docker-machine-nfs 0.4.1
```

```shell
# [optional] create a VirtualBox docker-machine with increased resources
docker-machine create --virtualbox-memory "4096" --virtualbox-disk-size "40000" -d virtualbox docker-machine

# [optional] enable NFS support
docker-machine-nfs docker-machine
```

Initial Rails setup

```shell
# rvm files
echo docker_rails_logstash > .ruby-gemset
echo ruby-2.3.3 > .ruby-version

# re-enter the directory so rvm picks up the new .ruby-* files
cd .

gem install rails
rails -v
# Rails 5.0.1

# new API-only project with a PostgreSQL connection
rails new . --api -d postgresql

# init database
rake db:create && rake db:migrate
```

Update the Rails configuration to use environment variables. This lets Docker Compose pass in container hostnames and credentials.
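In this setup the same key (for example POSTGRESHOST) can come from three places: the container environment set by docker-compose, the .env.development file loaded by dotenv-rails, and the fallback passed to ENV.fetch in config/database.yml. dotenv does not overwrite variables that are already set, so Compose wins inside the container. The sketch below models that precedence with plain hashes; `parse_env_file` and `resolve` are hypothetical helpers for illustration, not dotenv's implementation.

```ruby
# Hypothetical helpers illustrating value precedence in this setup;
# they are not dotenv's implementation.

# Minimal KEY=VALUE parser (real dotenv also handles quotes, `export`, etc.).
def parse_env_file(text)
  text.each_line.with_object({}) do |line, vars|
    stripped = line.strip
    next if stripped.empty? || stripped.start_with?('#')

    key, value = stripped.split('=', 2)
    vars[key] = value.to_s
  end
end

# The process env (e.g. docker-compose `environment:`) beats the .env file,
# which beats the fallback given to ENV.fetch. A key set to '' still counts
# as set, just as ENV.fetch returns '' for an empty variable.
def resolve(key, process_env:, dotenv:, default:)
  dotenv.merge(process_env).fetch(key, default)
end
```

With the Compose file in this post, POSTGRESHOST resolves to postgres inside the rails container and to localhost when Rails runs directly on the host. Note that `POSTGRESPASS=` in the Compose file yields an empty password rather than the fallback.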

1) Edit file: Gemfile, add: gem 'dotenv-rails'. Execute: bundle install.

2) Create a new dotenv file, .env.development, with the contents:

```
POSTGRESUSER=pguser
POSTGRESPASS=pgpass
POSTGRESHOST=localhost
LOGSTASH_HOST=localhost
```

3) Update config/database.yml to use the ENV variables:

```yaml
default: &default
  adapter: postgresql
  encoding: unicode
  host: <%= ENV.fetch('POSTGRESHOST', 'localhost') %>
  password: <%= ENV.fetch('POSTGRESPASS', 'pgpass') %>
  pool: <%= ENV.fetch("RAILS_MAX_THREADS") { 5 } %>
  username: <%= ENV.fetch('POSTGRESUSER', 'pguser') %>
```

Next I added some basic controller routes, edit file: config/routes.rb

```ruby
Rails.application.routes.draw do
  root to: 'api/pages#index'

  namespace :api do
    %w(bob loblaw law blog).each do |name|
      get "/#{name}", to: "pages##{name}"
    end
  end
end
```

And the corresponding controller, new file: app/controllers/api/pages_controller.rb. The controller simply responds with the request params as JSON.

```ruby
class Api::PagesController < ApplicationController
  def index
    render_params
  end

  def bob
    render_params
  end

  def loblaw
    render_params
  end

  def law
    render_params
  end

  def blog
    render_params
  end

  private

  # echo the request params (path and query) back as JSON
  def render_params
    render json: params
  end
end
```

Rails logging configuration

Add gems to output JSON logs and ship them to Logstash, edit file: Gemfile

```ruby
gem 'lograge'
gem 'logstash-event'
gem 'logstash-logger'
```

Run bundle install to install the new gems.
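The point of these gems is that each request becomes a single JSON document that is sent to Logstash instead of several plain-text lines in a log file. A rough stdlib-only sketch of that idea follows; the field names are illustrative assumptions (roughly the data lograge collects, not its exact output), and `request_event` and `send_event` are hypothetical helpers. Port 5228 matches the UDP port used by the Logstash setup in this post.

```ruby
require 'json'
require 'socket'
require 'time'

# Hypothetical per-request summary; field names are illustrative, roughly the
# kind of data lograge collects, not its exact output.
def request_event(method:, path:, controller:, action:, status:, duration_ms:)
  {
    '@timestamp' => Time.now.utc.iso8601(3),
    'method' => method,
    'path' => path,
    'controller' => controller,
    'action' => action,
    'status' => status,
    'duration' => duration_ms
  }
end

# Serialize the event as one newline-terminated JSON line and send it as a
# single UDP datagram, a shape a Logstash udp input with json_lines decodes.
def send_event(event, host:, port: 5228)
  line = JSON.generate(event) + "\n"
  socket = UDPSocket.new
  socket.send(line, 0, host, port)
  line
ensure
  socket&.close
end
```

UDP is fire-and-forget: if Logstash is down, the request still completes and the event is silently dropped, which is usually the right trade-off for request logging.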

Configure the logging gems, edit file: config/application.rb

```ruby
module DockerRailsLogstash
  class Application < Rails::Application
    # ...snip...

    # condense each request into one line, formatted as a Logstash JSON event
    config.lograge.enabled = true
    config.lograge.formatter = Lograge::Formatters::Logstash.new

    # ship the events over UDP to the Logstash udp input on port 5228
    config.lograge.logger = LogStashLogger.new(type: :udp, host: ENV['LOGSTASH_HOST'], port: 5228)
  end
end
```

Docker compose integration

First I created a simple Dockerfile based on the official ruby:2.3.3 image, new file: Dockerfile

```dockerfile
FROM ruby:2.3.3

RUN apt-get update -qq && apt-get install -y build-essential

# node/npm
RUN apt-get install -y nodejs npm

ENV APP_HOME /rails
WORKDIR $APP_HOME
```

Next I defined the Docker Compose file to include all the services, expose ports, mount configuration files, and use named volumes for persistent data, new file: docker-compose.yml

```yaml
version: '2'

services:
  elasticsearch:
    image: elasticsearch:latest
    ports:
      - '9200:9200'
      - '9300:9300'
    volumes:
      - elasticsearch:/usr/share/elasticsearch/data

  kibana:
    image: kibana:latest
    ports:
      - '5601:5601'

  logstash:
    command: logstash -f /etc/logstash/conf.d/logstash.conf
    image: logstash:latest
    ports:
      # matches the udp input in config/docker-logstash.conf
      - '5228:5228/udp'
    depends_on:
      - elasticsearch
    volumes:
      - ./config/docker-logstash.conf:/etc/logstash/conf.d/logstash.conf

  nginx:
    command: /docker/docker-start-nginx
    depends_on:
      - rails
    environment:
      - WORKER_PROCESSES=2
    image: nginx:latest
    ports:
      - '80:80'
      - '443:443'
    volumes:
      - ./bin/docker-start-nginx:/docker/docker-start-nginx
      - ./config/docker-nginx.conf.template:/docker/nginx.conf.template
      - ./config/docker-nginx.rails.conf:/etc/nginx/conf.d/rails.conf

  postgres:
    image: postgres:latest
    ports:
      - '5432:5432'
    volumes:
      - postgres:/var/lib/postgresql/data

  rails:
    build: .
    command: bin/docker-start-rails
    environment:
      - ELASTICSEARCH_HOST=elasticsearch
      - LOGSTASH_HOST=logstash
      - POSTGRESHOST=postgres
      - POSTGRESPASS=
      - POSTGRESUSER=postgres
      - RAILS_ENV=development
    ports:
      - '3000:3000'
    volumes:
      - .:/rails
      - bundle:/usr/local/bundle
    depends_on:
      - postgres

volumes:
  elasticsearch: {}
  bundle: {}
  postgres: {}
```

Docker bin script used to start nginx, new file: bin/docker-start-nginx

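```shell
#!/bin/sh
set -x

# drop the image's default server block so only rails.conf is served
if [ -f '/etc/nginx/conf.d/default.conf' ]; then
  rm /etc/nginx/conf.d/default.conf
fi

# render nginx.conf, substituting only $WORKER_PROCESSES so nginx's own $variables survive
envsubst '$WORKER_PROCESSES' < /docker/nginx.conf.template > /etc/nginx/nginx.conf

nginx -g 'daemon off;'
```

Docker bin script used to start Rails, new file: bin/docker-start-rails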

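```shell
#!/bin/sh
set -x

gem install bundler
bundle check || bundle install

bundle exec rake db:create
bundle exec rake db:migrate

bundle exec puma -C config/puma.rb
```

Both scripts are run directly by Compose, so make them executable: chmod +x bin/docker-start-nginx bin/docker-start-rails

Docker Logstash config, new file: config/docker-logstash.conf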

```
input {
  udp {
    host => "0.0.0.0"
    port => 5228
    codec => json_lines
  }
}

output {
  elasticsearch {
    hosts => ["elasticsearch:9200"]
    codec => json_lines
  }

  stdout {
    codec => json_lines
  }
}
```

Docker nginx config, new file: config/docker-nginx.conf.template

```nginx
user nginx;
worker_processes ${WORKER_PROCESSES};

error_log /var/log/nginx/error.log;
pid /var/run/nginx.pid;

events {
  worker_connections 1024;
}

http {
  include /etc/nginx/mime.types;
  default_type application/octet-stream;

  log_format main '$remote_addr - $remote_user [$time_local] "$request" '
                  '$status $body_bytes_sent "$http_referer" '
                  '"$http_user_agent" "$http_x_forwarded_for"';

  access_log /var/log/nginx/access.log main;

  sendfile on;
  #tcp_nopush on;

  keepalive_timeout 65;

  #gzip on;

  include /etc/nginx/conf.d/*.conf;
}
```

Docker nginx config to reverse proxy to Rails/Puma, new file: config/docker-nginx.rails.conf

```nginx
upstream puma {
  server rails:3000;
}

server {
  listen 80;
  server_name _;

  error_page 500 502 503 504 /500.html;

  location / {
    try_files $uri @puma;
  }

  location @puma {
    proxy_pass http://puma;
    proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
    proxy_set_header Host $http_host;
    proxy_redirect off;
  }
}
```

Docker

Build and start the Docker Compose cluster:

```shell
docker-compose build
docker-compose up
```

The app should now be accessible on port 80 through nginx.
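To confirm the nginx to Puma to Rails path from a script rather than a browser, a minimal sketch follows. It assumes the /api/bob route from config/routes.rb and relies on the controller echoing query params back as JSON; `smoke_check` and `echoes_params?` are hypothetical helpers, and the `probe` param is an arbitrary marker.

```ruby
require 'json'
require 'net/http'
require 'uri'

# Hypothetical helper: true when the JSON body echoes every probe param.
# A non-JSON body (e.g. an nginx 502 page) counts as a failure.
def echoes_params?(body, params)
  parsed = JSON.parse(body)
  parsed.is_a?(Hash) && params.all? { |key, value| parsed[key] == value }
rescue JSON::ParserError
  false
end

# Hypothetical smoke check: GET a demo route through nginx and verify that
# Api::PagesController echoed the query string back.
def smoke_check(base_url, path: '/api/bob', params: { 'probe' => '1' })
  uri = URI.join(base_url, path)
  uri.query = URI.encode_www_form(params)
  response = Net::HTTP.get_response(uri)
  response.is_a?(Net::HTTPSuccess) && echoes_params?(response.body, params)
end
```

Call it as `smoke_check('http://<ip>')`, substituting whatever address `docker-machine ip docker-machine` prints.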

```shell
open "http://`docker-machine ip docker-machine`"
```

I created a simple Rake task to simulate traffic, new file: lib/tasks/simulate.rake

```ruby
namespace :simulate do
  desc 'Simulate traffic'
  task traffic: :environment do
    require 'open-uri'

    # collect the /api paths, dropping the optional "(.:format)" segment
    routes = Rails.application.routes.routes.map do |route|
      path = route.path.spec.to_s
      path.split('(').first if path =~ /api/
    end.compact

    # request random API routes against Puma until interrupted
    loop do
      url = "http://localhost:3000#{routes.sample}"
      puts url
      URI.parse(url).read
    end
  end
end
```

I ran the rake task from my host system for a while via: docker exec -it dockerrailslogstash_rails_1 bundle exec rake simulate:traffic

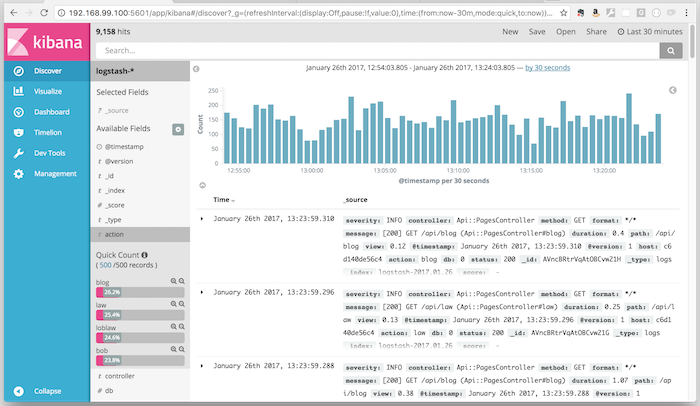
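The route-collection step in the task works because Rails route specs look like /api/bob(.:format). The standalone sketch below applies the same filtering to plain strings; `ROUTE_SPECS` is a hypothetical, hard-coded stand-in for what Rails.application.routes.routes would yield for the routes in this post, not captured output.

```ruby
# Hypothetical stand-in for the route specs produced by config/routes.rb.
ROUTE_SPECS = [
  '/',
  '/api/bob(.:format)',
  '/api/loblaw(.:format)',
  '/api/law(.:format)',
  '/api/blog(.:format)'
].freeze

# Same filtering as the rake task: keep specs containing "api" and cut off
# the optional "(.:format)" segment so each path can be requested directly.
def api_paths(specs)
  specs.map { |spec| spec.split('(').first if spec =~ /api/ }.compact
end
```

The root route is skipped even though it points at api/pages#index, because its spec is just "/" and never matches /api/.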

Then I opened Kibana to view the logs:

```shell
open "http://`docker-machine ip docker-machine`:5601"
```
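The same events can also be pulled straight from Elasticsearch, which is handy for scripting checks. A minimal sketch, assuming the Logstash elasticsearch output's default daily indices (logstash-YYYY.MM.dd, matched here by logstash-*) and Elasticsearch's query-string search via the `q` parameter of `_search`; `search_uri`, `hit_sources` and `search_logs` are hypothetical helpers.

```ruby
require 'json'
require 'net/http'
require 'uri'

# Hypothetical helper: a query-string search across the logstash-* indices.
def search_uri(es_host, query, size: 10)
  uri = URI("http://#{es_host}:9200/logstash-*/_search")
  uri.query = URI.encode_www_form(q: query, size: size)
  uri
end

# Extract the stored log events from a parsed _search response body.
def hit_sources(response)
  response.fetch('hits', {}).fetch('hits', []).map { |hit| hit['_source'] }
end

# Run the search and return the matching events as hashes.
def search_logs(es_host, query, size: 10)
  body = Net::HTTP.get(search_uri(es_host, query, size: size))
  hit_sources(JSON.parse(body))
end
```

For example, `search_logs(host, 'status:200')` should return recent successful requests, assuming the events carry a `status` field as lograge's do; `host` is the docker-machine IP.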